It’s not new anymore to generate images from text using deep AI. There are various models proposed for this. OpenAIs Dall-E, MidJourney or Stable Diffusion are just some examples.

It started as a trend when GAN created great images with ease. It used a discriminator network to create images.

One of the new kids on the block is AuraFlow (AF). What makes AuraFlow so attractive is the open licensing. It’s still considered beta as the version is no more than 0.1. It is completely open source so easy to get started. The diffuser model is hosted on huggingface site. Also downloading the model does not need you to login.

One of the gripes for Stable Diffusion (SD3) is the restrictive licensing. You will have to provide your information and agree to a non-commercial licensing. Although this is good enough for educational purposes, many people found it was too intrusive and against the open AI model. They always thought that open source AI is in jeopardy.

What is Stable Diffusion?

Stable Diffusion is modeled by a company called Stability AI. It was started by Emad Mostaque in 2019. Stability AI is based in London and San Francisco and as a startup it was one of the companies that was able to get a lot of funding from different venture companies. Its rise to prominence is of course Stable Diffusion which was touted to be open source but was licensed under a more restrictive licensing that open source gurus would prefer. Current version is 3 and the model in our test needed very less memory and was easily able to fit in our GPU.

What is AuraFlow?

Like we said, AuraFlow is the new kid on the block. It is released by a California based company FAL AI which was started in 2021 by Burkay Gur and Gorkem Yurtseven and licensed under Apache 2.0 licensing terms which is very generous. It is still in beta with a version number of 0.1 and may not be stable to run. Also the model itself is huge, and in our tests, it looked for 18 GB of GPU memory to load fully. I was not able to load it up on a 12 GB GPU.

Introductions out of the way, we will try to get some basic tests done with each of these models. I will start by introducing small code chunk that I used for running each of these models. There is nothing fancy in any of these codes. During the first run, they will download the model and then eventual runs they will only use it to build images based on provided prompts.

Let’s talk about Huggingface diffusers

We randomly brought up the point that we will use Huggingface diffusers. So what is it? To quote HuggingFace, Diffusers is the go-to library for state-of-the-art pretrained diffusion models for generating images, audio, and even 3D structures of molecules. Whether you’re looking for a simple inference solution or want to train your own diffusion model, Diffusers is a modular toolbox that supports both.

So, to put it simply, it is a library that Huggingface provides that helps in saving pretrained models for use by other programs. We will just use couple of these models for our tests.

Get our tools ready

$ python -m venv vhugger $ .\vhugger\Scripts\activate.bat $ pip install wheel $ pip install transformers accelerate protobuf sentencepiece $ pip install git+https://github.com/huggingface/diffusers.git

The first thing we did was create a virtual environment using pip. We installed diffusers library from github. We then activate it and add libraries as mentioned above to it. Now let’s start creating the main programs. The programs look very similar and very short.

Let’s start with the one for AuraFlow (AF).

import torch

from diffusers import AuraFlowPipeline

pipeline = AuraFlowPipeline.from_pretrained(

"fal/AuraFlow",

torch_dtype=torch.float16

)

pipeline.enable_model_cpu_offload()

prompt = "A high resolution photograph of a sloping ridge with ..."

saveFile = "./images/aura.jpg"

image = pipeline(

prompt=prompt,

height=1024,

width=1024,

num_inference_steps=25,

guidance_scale=3.5,

).images[0]

image.save(saveFile)Stable Diffusion (SD3) is not too different,

import torch

from diffusers import StableDiffusion3Pipeline

from huggingface_hub import login

#login()

pipeline = StableDiffusion3Pipeline.from_pretrained(

"stabilityai/stable-diffusion-3-medium-diffusers",

torch_dtype=torch.float16

)

pipeline.enable_model_cpu_offload()

prompt = "A high resolution photograph of a sloping ridge with ..."

saveFile = "./images/stable.jpg"

image = pipeline(

prompt=prompt,

negative_prompt="",

num_inference_steps=25,

height=1024,

width=1024,

guidance_scale=7.0,

).images[0]

image.save(saveFile)There is not much of a difference in these codes. The reason being both of them were using diffuser library framework. Like I said before, Stable Diffusion license is a bit more restrictive. So, we have to login to get the models. Before that we have to register with huggingface so we can get an API key. We need this the first time only. From next time onwards, application will use the cached version.

Compare output

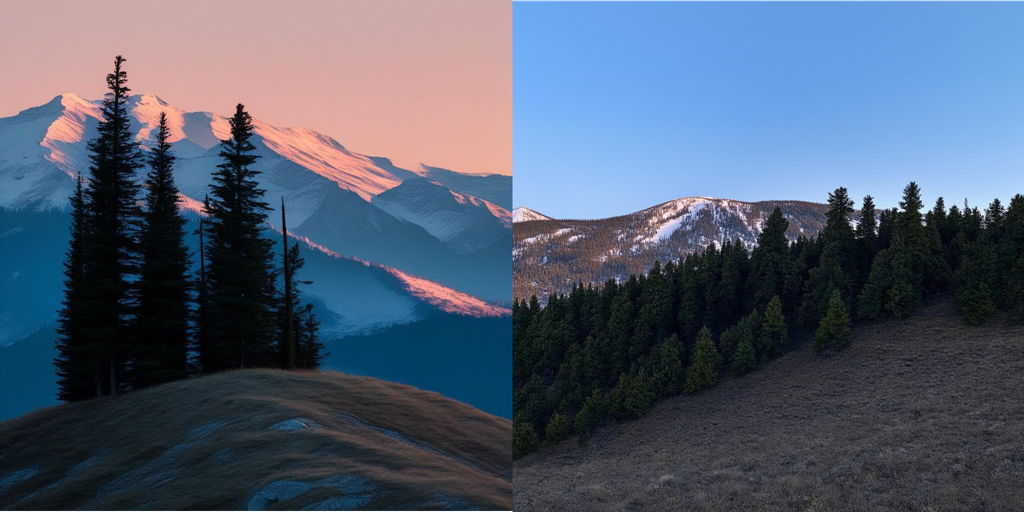

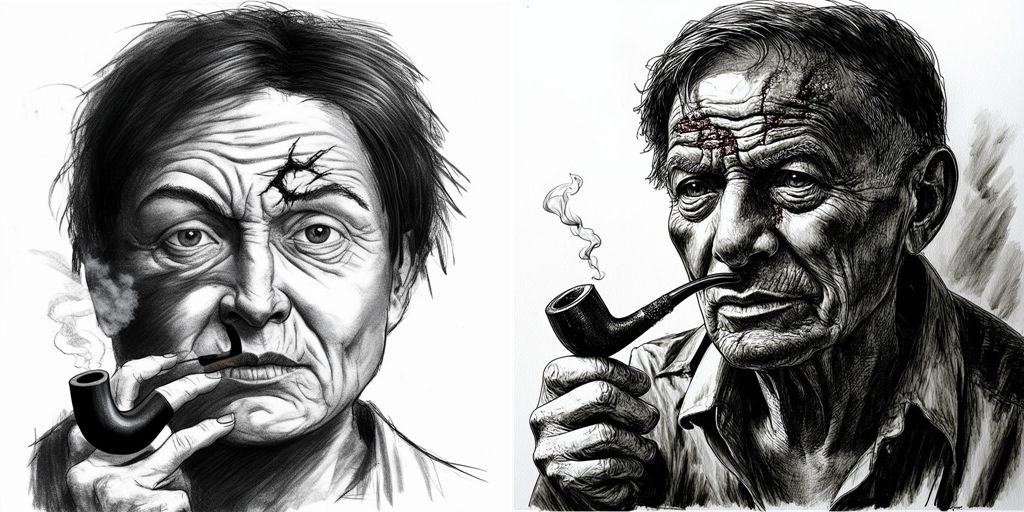

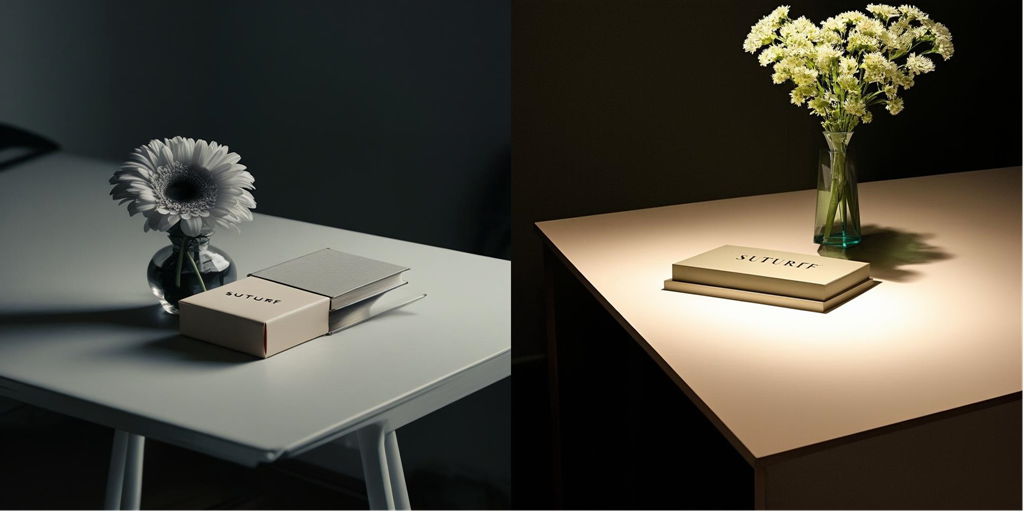

Next on, let’s run some tests on some random generations and compare output. In all the outputs below, left side is always AuraFlow and right-side image is Stable Diffusion.

I don’t think it is fair to compare these based on just a few outputs, especially when most of the time for AuraFlow I only had one output generated.

Basic Realistic Photograph

Prompt

A high resolution photograph of a sloping ridge with tall pine trees at sunset. Images of snow capped mountain visible in the background.

In this case I think both of them did bring out the ridge, SD3 showing more of it than AF. But considering the sunset itself, I think AF did a better job of representing the mountains. Overall I am not inclined to either, so, I think this one possibly is a tie for both.

Watercolor

Prompt

Water color style picture of snow capped mountains behind a clear blue sea with lush green forests in the foreground on right. A small yacht can be seen in the foreground. Time of day is sunrise.

This one definitely tilts a lot towards AF. I think the brush strokes that AF did is way more realistic than what SD3. I am not sure what that yellow semi-circle AF tried to draw is, but removing that would give me a much better water color painting than SD3. I would say AF takes the medal in this one.

By the way, remember what I said about not judging based on one image? The image on top of page is also drawn by SD3 and uses the same prompt. The bold strokes on that image is definitely way better than what it created in this one. It would be a difficult selection if I had to compare with that image.

Impressionist Style

Prompt

Impressionist style painting of a street illuminated by lamp posts at night. There are trees on both sides of the street. It is raining and the street is reflecting the light. A girl wearing a blue frock is seen at a distance with an umbrella.

Wow! Don’t they both look pretty? I think SD3 in this case has the reflections and light much better than AF. Unfortunately the girl is not holding her umbrella, not sure who is. AF is lot subdued, and some of the lights further down is a little bit messed up. I would probably give this one to SD3 even with the flaw of holding that umbrella.

Charcoal Sketch

Prompt

Charcoal sketch of a person’s face with a lot of wrinkles. There is a big scar on the face. The person is smoking a pipe.

In this case both of them did a nice job with the face, though I do like the shade done by SD3 better. I cannot say the same for the pipes. AF converts a finger to a pipe – so eventually the man actually has a finger in his mouth. For SD3, who ever heard that pipe is smoked from under the nose? I think if I correct the minor flaws, I will still try to correct the one SD3 created. So, a point more to SD3 for this.

Manga Style

Prompt

A Japanese manga type image with a female ninja dressed in blue carrying a sword fighting a male ninja dressed in red carrying a mace. They are standing in the middle of a bazaar. We can see the sun setting in the background.

Both of them did what I wanted them to. Unfortunately AF drew a lot of weapons that I have no clues where they came from. I do like that it has signs in Japanese on the boards. But overall SD3 is much cleaner. My only gripe here is that the mace is not fully drawn. I like the ninjas much better for AF, so overall I will probably have AF tied with SD3. Both did a fair job of this prompt.

Spatial Judgment

Prompt

A rectangular table with a flower vase on top of it. On the side is a small rectangular box. On top of the box is a book with ‘SUTURF’ written on it. Environment lighting is moody.

This is always difficult for any renderer to draw. I did ask the book to have a title of SUTURF. In case of AF, the box has this. The book though is well defined, but not on top of the box. Lets see what SD3 did. The layering was good. But is the top one a book? Cannot say. Is the bottom one a box? Again, cannot say. If I look closely, I would think the bottom one is a book and the top one is a box. So, it messed up the order. From lighting perspective, I think I like SD3. I would tend to give SD3 an edge on this too, but again not by much.

Conclusion

Those are just some sample images generated by the two tools. Remember one thing though, SD3 is a stable library by now, while AF is just in beta. Considering that I would say AF is an excellent library to try out. It is still way more GPU hungry and performs way slower (as in on my PC, a SD3 image rendered in less than 2 minutes versus AF image taking 23 minutes. That is a lot slower. I am sure as days goes by AF is going to be a strong contender to all of the rest of similar tools.

Just to reiterate, this is just my view after trying out both libraries. And I have generated a lot of images on SD3 and very few on AF (because it takes so much more time). So, there may still be some biasness towards SD3. Keeping all of those in consideration, and knowing that comparing one or two images just does not give a good result, consider this a comparison that is just a view of one person. You can run your comparison and use both the tools to come up with your view. And of course the images are present in this page for you to view and compare. The only thing done to the images is resize by half.

Hope you enjoy this comparison. You can leave your comment on what you think. Ciao for now!