Today we will talk about Natural Language Generation (NLG) and understand how to implement a Markov chain model in Java. I will not use any external libraries, and the code is really just a brain dump and not really a production ready code. But it will definitely illustrate how we can approach Markov chain to generate sentences based on a dictionary created from pre-defined content.

NLG is a part of Natural Language Processing (NLP). NLP deals with linguistic processing or in other words processing languages. Traditionally natural language generation works in the following way,

- Read different contents, process them and build a corpus of the data. This can also include the type of message it conveys, emotion of the message etc. So, for example we may tag a message for Astrophysics, Astronomy, Moon etc. So, when content generation is needed in one of these context this content will be picked up for processing.

- Now NLG algorithm will read through all of these contents and create some kind of relation between the words defined in the content.

- Store this data in a computer understandable form so that we can readily access them for future language generation.

- Generate texts based on definition, type and context of information needed.

Use Cases for Natural Language Generation

One of the best examples for NLG is next word prediction. When you type something on the phone, you will keep on getting three best matches for next word. You can select one of these words, or you can key in a different word. This is NLG in action. NLG is also used for text generation in blogs, articles and news reports. A lot of people use the power of NLG to generate content for their blogs.

The third major use case is conversational AI. We use conversational AI in a lot of domain including customer support scenarios.

Algorithms used in Natural Language generation

Next we will discuss the different algorithms that we use to solve this problem

Markov Chain

Markov Chain is one of the earliest algorithm to generate sentences. It reads relation of words in a sentence and creates a map for the words. Finally it uses this map to generate sentences. This is more of a next word randomization algorithm based on known corpus. Phone is one of the key devices where you see this algorithm used.

Recurrent Neural Network (RNN)

The previous algorithm was more of a random word selection. However, RNN uses the content to train a model and assigns probabilistic scores to each word in the dictionary. It will then select the best word based on the context and the score. However, for longer sentences, the context may be lost and sentences may start getting hapazard.

Long-Short Term Memory

LSTM theoretically is an extension of RNN. It solves the problem of maintaining context of the sentence even when generating a much longer sentence.

About n-grams

Before we start the main implementation, let’s spend some time understanding n-grams. N-grams is sequence of n adjacent words or letters from the provided set of text.

Let’s take the standard sentence.

The quick brown fox jumps over the lazy dog

If we want to get all the bi-grams or 2-grams for this sentence, we will get the following.

The quick quick brown brown fox fox jumps jumps over over the the lazy lazy dog

So we can generate 8 bigrams from a sentence having 9 words. Let’s see how many trigrams or 3-grams we can make from it.

The quick brown quick brown fox brown fox jumps fox jumps over jumps over the over the lazy the lazy dog

So, we can generate 7 trigrams. So, for a sentence consisting of K words, we can generate K – (N – 1) N-grams.

You may ask what are these used for? N-grams are used for maintaining relationship between words in a sentence. You can create a probabilistic model for the word occurrence. Markov chain uses this model to implement the algorithm.

For our application, we will build the Markov chain model based on trigrams.

Generating Trigrams

For this example we will extend an iterator to keep getting sets of three words.

public class TrigramGenerator implements Iterator<String []> {

int currPointer;

final int wordLen;

final String[] words;

public TrigramGenerator(final String txt) {

currPointer = 0;

words = txt.split("\\s");

wordLen = words.length;

}

@Override

public boolean hasNext() {

// Will always need three letters

return currPointer < wordLen - 2;

}

@Override

public String [] next() {

final String[] arr = new String[3];

arr[0] = words[currPointer].replace("\"", "");

arr[1] = words[currPointer+1].replace("\"", "");

arr[2] = words[currPointer+2].replace("\"", "");

currPointer++;

return arr;

}

}Let’s analyze what we are doing here. We splitting the text string received in the constructor and storing in an array (Line 7). Next we have implemented the methods for Iterator. Method hasNext on line 14 checks if we still have at least three words left to return.

Next method on line 20 returns next three words from our word list. Finally it increments the counter by 1 so that next time we do not get the same sequence.

So, let’s run this against the sentence below and see what our output is.

Blue skies shining at me, nothing but blue skies do I see.

Output looks as follows:

Blue - skies - shining

skies - shining - at

shining - at - me,

at - me, - nothing

me, - nothing - but

nothing - but - blue

but - blue - skies

blue - skies - do

skies - do - I

do - I - see.

One thing to note here is that we have not done any data cleansing in this process. However, we will cleanse data and send here when we are ready to generate sentence.

In the next section, let’s start working on the generating sentences.

Sentence Generation

The first thing we will do is to read files and generate the trigrams. We will define a varargs constructor to accept any number of files.

final List<String> files2load;

final SecureRandom secureRandom = new SecureRandom();

public TextGenerator(final String ... files) {

this.files2load = new ArrayList<>(files.length);

for (final String file : files) {

this.files2load.add(file);

}

}This part is simple enough. We just take a list of files and store them for future use.

Data Cleansing

Next step is to generate the trigrams from all these files. However before that we will define a method to read and cleanse the file. It does the following,

- Replace new line and carriage return with spaces

- Replace multiple spaces in a line with single spaces

- Prepend and append space to fallowing symbols: ., ;, -, “, ‘, ,

I have not put too much thought in this method, just wrote it as I thought over what it needs to do. It may not be the best implementation, but it will work fine.

private String readAndCleanFile(final String fileName)

throws IOException {

final StringWriter sw = new StringWriter();

try (final FileReader istream = new FileReader(fileName)) {

int c;

boolean isLastSpace = false;

while ((c = istream.read()) != -1) {

if (c == '\n' || c == '\r') {

sw.append(' ');

isLastSpace = true;

continue;

}

if (c == '.' || c == ';' || c == '-' || c == '"' || c == '\'' || c == ',') {

if (isLastSpace) {

sw.append((char)c).append(' ');

} else {

sw.append(' ').append((char)c).append(' ');

}

isLastSpace = true;

} else if (c == ' ') {

if (isLastSpace) {

continue;

}

sw.append(' ');

isLastSpace = true;

} else {

sw.append((char)c);

isLastSpace = false;

}

}

}

return sw.toString();

}The reason why we want to append/ prepend spaces to every symbol is so that we can separate them out from the real words. In this case they will be treated as individual words.

Generate Trigrams

private List<String []> generateTrigrams() {

// Loop through the files

final List<String []> lstTrigrams = new ArrayList<>();

try {

for (final String afile : this.files2load) {

final String fstr = readAndCleanFile(afile);

// Now get the trigrams

final TrigramGenerator gen = new TrigramGenerator(fstr);

while (gen.hasNext()) {

lstTrigrams.add(gen.next());

}

}

} catch (IOException e) {

e.printStackTrace();

}

return lstTrigrams;

}We loop through all the files and generate trigrams from each of the files. Not much going on here.

Create a next word probabilistic list

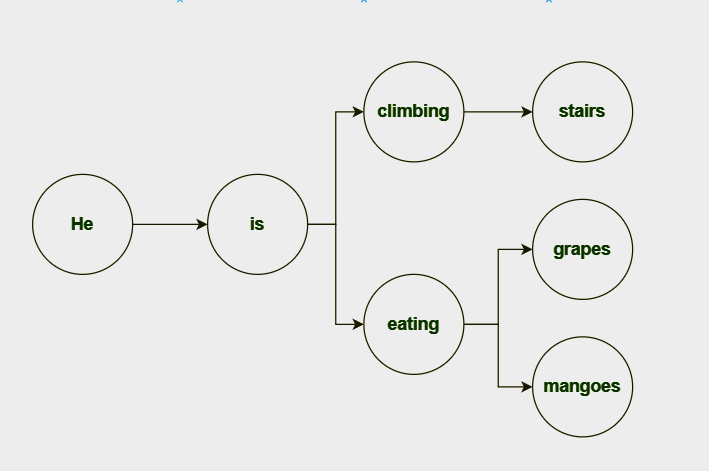

Let us understand what we want to do here. This is creating the simplistic Markov chain. Let us take three sentence sections for our example.

- He is climbing stairs

- He is eating grapes

- He is eating mangoes

So, if you look at the diagram above, you will see there is only 1 way of going from ‘He’ to ‘is’. However from ‘is’, you can go to ‘climbing’ or ‘eating’. So, the distribution in this case is 0.5 each.

So for our model we will take each of the trigrams (w1, w2, w3) and for each bigram (w1, w2) we will note what are the different next words can be. This we keep in a list. Let’s look at the code.

private Map<String, List<String>> genPossibleNextWords(final List<String []> trigrams) {

final Map<String, List<String>> nextWords = new HashMap<>();

for (final String[] strArr : trigrams) {

final String key = strArr[0].toLowerCase() + "~~" + strArr[1].toLowerCase();

if (nextWords.containsKey(key)) {

nextWords.get(key).add(strArr[2]);

} else {

final List<String> newLst = new ArrayList<>();

newLst.add(strArr[2]);

nextWords.put(key, newLst);

}

}

return nextWords;

}Sentence Generation

With all our base work done, sentence generation becomes simple. We will start with two random words in the trigram array, and start forming a sentence by randomly picking the next word from the list of options we have already generated.

public String formSentence(

final int len,

final String word1,

final String word2) {

final List<String []> lstWords = generateTrigrams();

final Map<String, List<String>> mapWords = genPossibleNextWords(lstWords);

String w1 = "";

String w2 = "";

final StringBuilder strb = new StringBuilder();

if (word1 != null && word2 != null) {

w1 = word1;

w2 = word2;

} else {

final int stWord = secureRandom.nextInt(lstWords.size() - 3);

w1 = lstWords.get(stWord)[0];

w2 = lstWords.get(stWord)[1];

}

strb.append(w1).append(" ").append(w2).append(" ");

for (int i=0; i<len; i++) {

final String key = w1.toLowerCase() + "~~" + w2.toLowerCase();

final List<String> lstChoices = mapWords.get(key);

if (lstChoices == null) {

System.out.println("Stuck...");

return (strb.toString());

}

final String w3 = lstChoices.get(secureRandom.nextInt(lstChoices.size()));

strb.append(w3).append(" ");

w1 = w2;

w2 = w3;

}

return(strb.toString());

}Lines 6 and 7 we generate the trigram and next word map respectively. Then we start iterating till the count of words to be generated is reached.

Testing

For the test corpus, I extracted random selections of sentences from the following three ebooks that I grabbed from Project Gutenberg. This is by no means a big corpus, but good enough for our test.

- Pride and Prejudice – Jane Austen

- Walden, and on the Duty of Civil Disobedience – Henry Davd Thoreau

- The Scarlet Letter – Nathaniel Hawthorne

We will generate 100 word long sentences. Let’s see what we get.

I had cut down some tall , with a border of grizzled locks beneath his skull - cap ; it connects itself in every other pilgrim and wanderer , into a hollow in a country where the wildness of her shame , with a terrific legend . They averred , that this is now recognized as its indication . And over again , to discern here , whence sprung a harvest of armed enemies , against intruding on the score of ground rent and fuel ; and had placed the whole , I shall do better amongst other faces ; and

By the love of novelty , and it suggested to me this advantage at least ought to have stored their memories than that which brings it within the control of individuals who neither love nor understand him , by that momentary glance . O Fiend , whose sphere and abilities he must seek to narrow his world , and afterwards as the phrase is . Exhort her to confess the truth! The infant at her bosom . These will be sold readily , the inhabitants have appeared to be the right of property in a little common sense , it gives

Near the end , though in the various mansions of the lively type seems to me that every ebb and flow forth in Eden ; worthy to have considered what a change had come from one another had sometimes made choice of evils . Would the savage priests ; who , at periods generally much posterior to the delicate , evanescent , and flow of the earth , but transparent stream , bringing all the entangled outcry of a home , set up as late as you do . Thinking that when he had felt the sentiment of old English birth and breeding

A longer one now. Unfortunately, none of these consider any context.

Near the end , and not unnatural helplessness at the old town's brighter aspect , so that they may become the tools of magic , the body , the track , or which displayed consummate command of any necessary or important work may be that he gathered herbs , and the result was a frequent practice , in that brief interval , she gained from many people said , to that gusty tenderness ; it was almost intolerable to a mortal man in fellowship with angels . â??What evil thing is at least , if not before . Pearl , therefore , Hester Prynne! said the voice . He is , for our lives , our houses are built and paid for , so to the old manâ's utterance ; they are lined with beauty , as it seems to me the happiness of seeing you ; and likewise familiar with whatever the savage have been able to understand , as soon as imperious to be any longer trodden by his marvellous gifts as a savage? If it were , with his florid cheek , his brisk and vigorous step , however , and reading the names of vessels that had existed in herself . It is which determines , or of Chaucer , each limb proved to be confounded with fire ; but , in one instance certainly , there are specimens still extant in the wood with the thought will doubtless seem heretical to more than we have secured , I presume , which was the only plain one in the New abode of the rusty little schooners that bring firewood from the Old commercial enterprise of the party with which I have made a rush at the same skill from the prison rose - bush , which makes the false realism of our most primitive ancestor which still survived in us . What if I have put her to her a lovely child , on the stage - effect of public life , but not decrepit woman , my fortune to make any ; and , making an investment in ink , paper , and then to define his position is then one of them , being of the conditions of his life to eat . His being neither is exactly consistent with the scarlet letter flaming on her pedestal , and other birds have built for this woman's soul lies greatly with you . It was sufficiently characteristic . Bingley intended it likewise , one of them would have been still smarting under his rejection , or literature , and received no inconsiderable pleasure from the petty and wearisome incidents , and , however , there were points well worth noting . A stain on his band ; it would be taught to look upon it , smiling .

Conclusion

In this blog post we created a simplified version of Markov chain to generate sentences. It used Java only, and no external dependency of any sort. Hope you have fun reading this. Ciao for now!