AI prompting is both an art and a skill. To get accurate results from a large language model (LLM), you need to ask the right questions. Before we move on to agentic AI, let us take a moment to look at how to prompt an LLM effectively. We will first see how LLMs interpret prompts and then walk through a few prompting techniques. So, let’s get started. Enjoy your stay here.

How does an LLM process prompts?

The first thing to understand is that an LLM is not intelligent in the human sense. The magic comes from the LLM repeatedly predicting the best-fitting next token. It uses attention to weigh which tokens in the input matter most, draws on what it learned during training, and outputs the most probable next token. Let’s go over the steps in brief.

Break text into tokens and convert to numeric value

As we have learned before, an LLM works on tokens. As a first step, the prompt is broken down into tokens. Each token is then converted into a numeric ID, the same ID that was assigned to it during training.
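The tokenization step can be sketched in a few lines of Python. This is only a toy illustration: the function names `build_vocab` and `encode` are made up for this post, and the tokenizer splits on whole words and punctuation, whereas real LLM tokenizers typically work on sub-word pieces.

```python
import re

def build_vocab(corpus):
    """Assign a fixed integer ID to every distinct token seen in the 'training' text."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for tokens never seen in training
    for token in re.findall(r"\w+|[^\w\s]", corpus.lower()):
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    """Break a prompt into tokens and look up the ID each token got at training time."""
    tokens = re.findall(r"\w+|[^\w\s]", text.lower())
    return [vocab.get(token, vocab["<unk>"]) for token in tokens]

# Hypothetical one-line "training corpus", for illustration only.
vocab = build_vocab("Why is the sky blue? The sky is blue because of scattering.")
ids = encode("Why is the sky green?", vocab)
print(ids)  # [1, 2, 3, 4, 0, 6]
```

The word "green" never appeared in the toy corpus, so it maps to the unknown ID 0. Every other token gets the same ID it was given when the vocabulary was built.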

Read all the tokens in one go

Now that we have a sequence of token IDs, the model reads all of them together. The maximum number of tokens it can read is set by the model’s context size.

Pass to Attention layer and get next token

Once all the tokens are read, they are passed through the trained attention layers. These layers capture the context and the relationships between words, and the model finally suggests what should come next. All of this is a form of pattern recognition and word prediction. The step repeats, predicting one token at a time, until the LLM hits a size limit or produces a stop token.
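The predict-and-repeat loop can be sketched as below. This is a heavily simplified stand-in, not how a real model works internally: the probability table `NEXT_TOKEN_PROBS` is invented for illustration, and the toy looks only at the last token, while real attention layers look at every token in the context.

```python
# Hypothetical toy "model": for each token, the probability of each possible next token.
NEXT_TOKEN_PROBS = {
    "the": {"sky": 0.6, "sea": 0.4},
    "sky": {"is": 0.9, "<stop>": 0.1},
    "is": {"blue": 0.7, "grey": 0.3},
    "blue": {"<stop>": 1.0},
}

def generate(prompt_tokens, max_new_tokens=10):
    """Append the most probable next token until a stop token or the size limit."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):  # size limitation
        candidates = NEXT_TOKEN_PROBS.get(tokens[-1])
        if candidates is None:  # the toy table knows nothing about this token
            break
        next_token = max(candidates, key=candidates.get)  # most probable next token
        if next_token == "<stop>":  # stop token ends generation
            break
        tokens.append(next_token)
    return tokens

print(generate(["the"]))                    # ['the', 'sky', 'is', 'blue']
print(generate(["the"], max_new_tokens=2))  # ['the', 'sky', 'is']
```

The first call ends because the stop token is predicted. The second ends because the size limit is reached first.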

If all of this feels too technical, think about it this way. You go to the library to find a book on the Theory of Relativity. You know it will be filed under Technical, then Science, and within that Physics. So, you go through the filing sections in that order: first the Technical cabinet, then Science, and finally Physics, where you search by author name. Think of each of these small bits of information as a token. If you know the tokens, you can navigate directly by following the relationships between them (defined by the attention layers). However, if you know the book belongs to Science but not that it is Physics, your only option is to go through all the Science books. In some cases, this can land you on the wrong book.

An LLM works in a similar way. The more relevant information you provide, the less likely it is to hallucinate. Tokens are placed in a vector space according to their semantic meaning, and more than one relationship can be stored between tokens. So the more relevant tokens the prompt contains, the easier it is for the LLM to follow the right path through that space and arrive at the next relevant token.

Types of Prompting

Let’s see some basic types of prompting. These are very simple rules that help models produce output in a specific way.

Zero Shot Prompting

Most users are accustomed to zero shot prompting. It works well when the question is covered by the LLM’s training corpus, so the model can simply keep predicting the next token. “Why is the sky blue?” and “Why do mosquito bites sting?” are examples of zero shot prompting. In this case, we do not give the model any examples of how to respond.

One Shot Prompting

In One Shot prompting, we provide the model with a single example of the expected response. Let’s see an example.

Classify the following as Positive or Negative.

Q. This product is wonderful and meets all expectations.

S. Positive

Q. Seems like it’s going to rain and we cannot go out.

S.

Negative

In this case, we gave one example, then asked a question and added the answer tag so the model could infer what we wanted. The model responded in the same format with the correct sentiment.

Few Shot Prompting

Few Shot prompting also provides examples to the model. However, in this case we provide more than one example. Let’s look at an example of this as well.

Ruby: a precious red gemstone, a variety of corundum, known for its hardness and vibrant color, which is caused by the presence of chromium.

Opal: a unique gemstone known for its vibrant play of colors, formed from a hydrated amorphous silica structure that diffracts light.

Lapis Lazuli:

A semi-precious stone recognized for its deep blue color, flecked with gold pyrite and white calcite. It is a metamorphic rock composed primarily of lazurite, along with other minerals like sodalite, calcite, and pyrite. Its unique color and patterns have made it a prized material for jewelry and art throughout history.

In this case I never said what I wanted. But the model looked at the examples and understood that I was describing the stones. So, it did the same for Lapis Lazuli as well.
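A prompt of this shape is easy to assemble in code. The sketch below is one possible way to do it. The helper `build_few_shot_prompt` is a hypothetical name, and the example definitions are shortened from the ones above.

```python
def build_few_shot_prompt(examples, query):
    """Lay out 'Label: completion' examples, then leave the final label open."""
    lines = [f"{label}: {completion}" for label, completion in examples]
    lines.append(f"{query}:")  # the model is expected to continue from here
    return "\n\n".join(lines)

examples = [
    ("Ruby", "a precious red gemstone, a variety of corundum."),
    ("Opal", "a unique gemstone known for its vibrant play of colors."),
]
prompt = build_few_shot_prompt(examples, "Lapis Lazuli")
print(prompt)
```

Passing a single example gives a one shot prompt, and passing an empty list leaves only the open label, which is effectively zero shot.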

Chain of Thought Prompting

This one is interesting. This kind of prompting is used when you want the model to do something complicated that it is not normally trained for, or cannot do well. You provide an example in which you break down how to arrive at the solution, and the model tries to replicate the same reasoning. Let’s see a simple example.

Roger bought 20 pencils. He broke 2 pencils, went to the store and got 5 more. How many pencils does he have now?

Answer is 23.

————————

Now answer this:

Binny had 42 chocolates. He ate 12 of them. His friend Kapil gave him 10 more chocolates. How many chocolates is Binny left with?

Binny was left with 42 - 12 + 10 = 30 chocolates

Well, models are normally not good calculators. So, in this case, even though the model set up the problem correctly (42 - 12 + 10), the answer is of course incorrect: it should be 40, not 30. Let’s see if we can fix this using Chain of Thought prompting.

Roger bought 20 pencils. He broke 2 pencils, went to the store and got 5 more. How many pencils does he have now?

Answer: Roger started with 20 pencils. He broke 2. So, he is left with 20-2=18. He got 5 more. So, now he has 18+5=23. This is the final answer.

————————

Now answer this:

Binny had 42 chocolates. He ate 12 of them. His friend Kapil gave him 10 more chocolates. How many chocolates is Binny left with?

Sure! Based on what you've provided, we can calculate the final number of chocolates Binny has as follows: Initially, Binny had 42 chocolates. He ate 12 chocolates, so he was left with 42 - 12 = 30 chocolates. Then his friend Kapil gave him 10 more chocolates, so he now has a total of 30 + 10 = 40 chocolates. Therefore, Binny is left with 40 chocolates after eating some and receiving additional gifts from Kapil.

Thanks for the help, Mistral. That is the right answer!
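The arithmetic in both transcripts can be checked independently of any model. The small helper below, `running_total`, is a hypothetical name used only for this check. It walks through the changes one step at a time, the way the Chain of Thought example does.

```python
def running_total(start, changes):
    """Apply each change in order and record every intermediate step."""
    steps = []
    total = start
    for change in changes:
        new_total = total + change
        sign = "+" if change >= 0 else "-"
        steps.append(f"{total} {sign} {abs(change)} = {new_total}")
        total = new_total
    return total, steps

# Binny: starts with 42, eats 12, receives 10.
total, steps = running_total(42, [-12, 10])
print(steps)  # ['42 - 12 = 30', '30 + 10 = 40']
print(total)  # 40

# Roger: starts with 20, breaks 2, buys 5.
print(running_total(20, [-2, 5])[0])  # 23
```

Written out step by step, the intermediate value 30 is clearly not the final answer, which is the mistake the first response made.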

System Level Prompting

System Level or Developer prompting is normally used from applications. However, some tools for running models do expose this feature to you. It helps initialize the model with a role or character. You can say something like “You are a civil engineer very efficient in designing bridges”. Now, any time you provide additional prompts, the model will treat itself as a “civil engineer” with experience of building “bridges”. There is no magic to it. Remember that from the model’s perspective everything is a token. As a rough mental picture, imagine the model has learned from 50 million tokens, of which 5 million are related to the two descriptions we provided. In effect, we are steering the model toward that smaller subset of its corpus so that it gives better and more meaningful responses.
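When an application sends a system prompt, it usually travels as a separate message ahead of the user's prompt. The sketch below assumes the common convention of a list of `role`/`content` dictionaries. The exact shape depends on the tool or API you use, so treat the field names and the `build_messages` helper as assumptions.

```python
def build_messages(system_prompt, user_prompt):
    """Put the system prompt first so it frames every later user prompt."""
    return [
        {"role": "system", "content": system_prompt},  # assumed field names
        {"role": "user", "content": user_prompt},
    ]

messages = build_messages(
    "You are a civil engineer very efficient in designing bridges.",
    "What should I consider when spanning a 200 m river?",  # made-up user question
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```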

Role Based Prompting

The example we gave under System Level prompting is an ideal example of Role Based prompting. Here we are trying to give the LLM a specific personality. “You are a software developer with 15 years of experience” or “You are an experienced meteorologist” – these are examples of assigning specific personalities to the model.

Task Decomposition Technique

In this type of prompting, we break the central idea down so that the LLM processes the request in smaller chunks. This helps it to generate better responses.

Write a scientific paper on using solar energy. Split it into the following tasks:

Topic 1: Discuss the current challenges of electricity

Topic 2: Discuss solar energy and its progress

Topic 3: Add how solar energy can offset the problems of electricity shortage

Now the LLM will expand on these subtopics to come up with the full document.
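If you build such prompts often, the numbering can be generated for you. Here is a minimal sketch with a hypothetical helper `decompose`. The topic wording is taken from the example above.

```python
def decompose(main_task, topics):
    """Turn a main task and a list of subtopics into one decomposed prompt."""
    lines = [f"{main_task} Split it into the following tasks:"]
    for number, topic in enumerate(topics, start=1):
        lines.append(f"Topic {number}: {topic}")
    return "\n\n".join(lines)

prompt = decompose(
    "Write a scientific paper on using solar energy.",
    [
        "Discuss the current challenges of electricity",
        "Discuss solar energy and its progress",
        "Add how solar energy can offset the problems of electricity shortage",
    ],
)
print(prompt)
```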

Other Prompt Frameworks

We have been talking about some basic prompting techniques. There are other similar ones, but for now we will switch gears and look at some frameworks that help in getting better responses.

RTF

Role – Task – Format

This is a very simple framework for enhancing prompting.

- Role – This defines the persona that the model should assume. For example you can ask the model to assume the role of a “travel agent”.

- Task – Here we will define what we want the model to help us with.

- Format – What is the response format?

Here is a basic sample request.

{Role} You are an experienced travel agent. {Task} Provide me a 5 day itinerary for a trip in Paris. {Format} Output format should be a JSON list.

TAG

Task – Action – Goal

This works well when you are researching ways to solve a specific problem.

- Task – What is it that you are trying to achieve?

- Action – What is an approach that you are looking for?

- Goal – What is the final target that needs to be achieved?

Here is a basic sample.

{Goal} I have a website and want to increase SEO rating {Task} by using appropriate AI tools. {Action} Provide me a bulleted list of items that I can do.

RAILS

Role – Audience – Intent – Language – Structure

RAILS is a nice framework for building speeches and documentation. A very similar framework is CRISPE, which we will see later. RAILS may be used when a specific tone or style of speech is desired from the model.

- Role – Similar to others, this is the persona

- Audience – This sets what kind of response to generate. A response given to a 6th grader will be different from one given to a 12th grader.

- Intent – Outcome that we are looking for.

- Language – This additionally sets the tone of the response. Will it be professional or more like a blog post?

- Structure – How to format the response.

Here is an example for generating a speech about an imaginary robotics company.

{Role} You are a project manager of Brainy Robotics working on a project trying to build humanoids. {Audience} You are addressing a group of elite scientists who are looking to get more information on the current state of affairs. {Intent} Create a detailed speech for delivery. Currently the robots (code named ZB-1) are built successfully and can move around and do basic human-like tasks. The future plan is to build even better robots that can work independently and be part of society. Think about “I, Robot” by Isaac Asimov when planning for the future state. {Language} The speech should be professional and can be technical. {Structure} Structure the speech as Current State -> Future State -> Action Item -> Conclusion.

GRASP

Goal – Role – Audience – Style – Process

GRASP is similar to RAILS, which we have seen above. It gives you the same flexibility in a different format. Where GRASP differs is its focus: whereas RAILS focuses more on the conversational aspect, GRASP focuses more on the goal-driven aspect.

- Goal – What is the purpose of this? What do we want to achieve?

- Role – What is the persona of the model?

- Audience – Who are we addressing?

- Style – What would be the tone of this response? Will it be playful or formal?

- Process – Provide the model with steps that it can act on.

Again, let’s see an example.

{Goal} A group of teenagers is trying to sell lemonade from a temporary lemonade stand. The proceeds will benefit a local food bank. We are trying to create a 200-word marketing brochure to promote the event. {Role} Act as an expert in creating advertising and marketing materials. {Audience} You are addressing members of the public who may buy lemonade from you. They may be of any age group or sex. {Style} The style of the brochure should be humorous and suitable for all age groups. {Process} Emphasize that every sale will benefit the local food bank. Add that lemonade is a healthy drink. State that the audience is benefiting society while enjoying a cool drink.

CRISPE

Context – Role – Instructions – Steps – Parameters – Example

We have already seen RAILS above. CRISPE extends the framework to create more complex requests that can cater to more specialized content generation.

- Context – Background information for the output.

- Role – What is the persona of this model?

- Instructions – Clear instructions on what is expected of the model.

- Steps – Break down the process into individual steps that this model should carry out.

- Parameters – Specify the boundaries, constraints and any quality aspects.

- Example – An example for the output format expected.

Let’s take an example of a CRISPE prompt.

{Context} We are a book publisher. Currently we publish books mostly in paper format. Our annual sales are steadily declining because of two reasons. 1) Piracy of material 2) Readers switching to eBooks. {Role} Act as a senior consultant who has experience in reviving companies by providing solutions. {Instructions} Produce an action plan that can create an increase in revenues. Also plan short and long term goals for revenue increase. {Steps} Deliver these sections. 1) Findings 2) Mitigation Strategies 3) Further Recommendations for Revenue Growth. {Parameters} All actions must show results starting at 6 months. Include plans in 6-month increments with achievable goals and include the expected percent impact on revenue. {Example} Structure as a business report suitable for executives of the company.
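All five frameworks follow the same pattern: a fixed list of labeled sections in a fixed order. That makes them easy to template. The sketch below is one possible way to do it. The names `FRAMEWORKS` and `build_prompt` are hypothetical, and the curly-brace labels simply mirror the samples in this post. They are not a syntax that models require.

```python
# Section order for each framework, as listed in this post.
FRAMEWORKS = {
    "RTF": ["Role", "Task", "Format"],
    "TAG": ["Task", "Action", "Goal"],
    "RAILS": ["Role", "Audience", "Intent", "Language", "Structure"],
    "GRASP": ["Goal", "Role", "Audience", "Style", "Process"],
    "CRISPE": ["Context", "Role", "Instructions", "Steps", "Parameters", "Example"],
}

def build_prompt(framework, sections):
    """Join the labeled sections in framework order, refusing incomplete prompts."""
    expected = FRAMEWORKS[framework]
    missing = [name for name in expected if not sections.get(name)]
    if missing:
        raise ValueError(f"{framework} prompt is missing: {', '.join(missing)}")
    return " ".join(f"{{{name}}} {sections[name]}" for name in expected)

prompt = build_prompt("RTF", {
    "Role": "You are an experienced travel agent.",
    "Task": "Provide me a 5 day itinerary for a trip in Paris.",
    "Format": "Output format should be a JSON list.",
})
print(prompt)
```

Failing loudly on a missing section is a deliberate choice here: a forgotten Audience or Parameters section is easy to miss by eye and tends to produce vaguer output.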

Conclusion

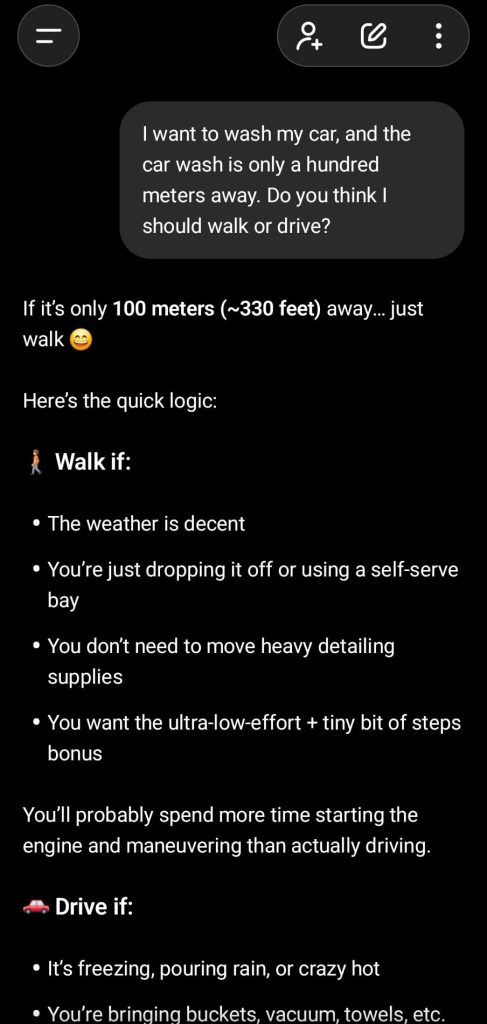

Here’s a nice little piece of AI humor that I received on WhatsApp. It shows how AI can respond to ambiguous prompts.

We have documented just a few items from the large library of prompting recommendations. Adding any of these frameworks to your workflow should give you more consistent and better outputs from the models you work with. A lot of thought goes into good prompting. If you do not understand the problem, you cannot explain it to the model. So, before you start writing a prompt, it always helps to understand exactly what is required.

Ciao for now!